Introduction

Hello world! Welcome to my Stable Diffusion guide. The idea of this page is to help people of every level use my models and LoRAs as effectively as possible.

About Medium

My articles are published on a platform named Medium. Without a membership, Medium lets you read only 3 articles per month for free. If you want to read more, you can:

- Subscribe to Medium using my referral link: https://medium.com/@inzaniak/membership. This supports both me and an awesome platform. ❤️

- Search on Google for interesting ways to bypass this limitation

Support ❤️❤️❤️

If you want to support me, right now you have the following options:

Free

- Follow me on Instagram: @inzaniak_aiart

- Follow me on DeviantArt: Inzaniak

- Leave a review or post your artwork under my models and LoRAs!

Pay what you want

- Buy my music: Music | Inzaniak (bandcamp.com)

Subscription

- Subscribe to Medium using my referral link: https://medium.com/@inzaniak/membership

- Subscribe on my DeviantArt: Inzaniak

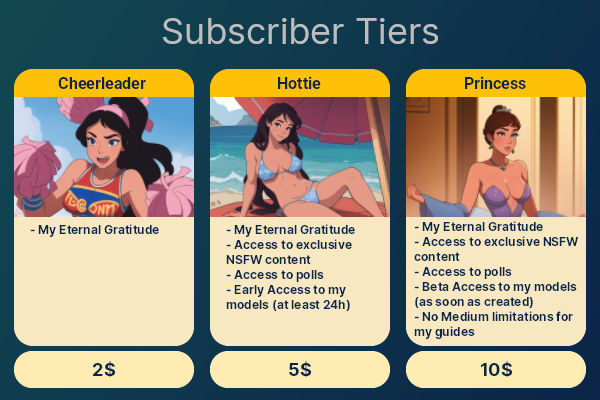

- 3 different tiers on my DeviantArt:

- Cheerleader ($2): no rewards, just my gratitude ❤️

- Hottie ($5): access to NSFW content, polls, and early access to my models (at least 24h before CivitAI users)

- Princess ($10): everything in the previous tiers, plus beta access to my models (as soon as I've finished training the LoRA), no limitations on my Medium guides, and access to multiple versions of the same LoRA.

Guides

In this section you'll find all the guides I've published, divided by difficulty.

Beginner

Setup

If you are a complete Stable Diffusion beginner, the first thing you'll need to do is install the AUTOMATIC1111 UI. To do this, I recommend checking out my step-by-step tutorial on Medium:

Stable Diffusion Ultimate Guide pt. 1: Setup | by Umberto Grando | Mar, 2023 | Medium

In this tutorial you'll learn:

- What Stable Diffusion is

- How you can use SD

- The requirements

- Step-By-Step setup of the requirements and UI

- Installing a model

- Basic usage

Prompting

Once you've completed the setup, it's time to learn the secrets of prompting. The SD UI supports a series of prompt commands that not everybody knows about. Luckily for you, I've written an article that explains everything step-by-step:

Stable Diffusion Ultimate Guide pt. 2: Prompting | by Umberto Grando | Mar, 2023 | Medium

In this article, you'll learn:

- Basic prompting: how to use a single prompt to generate an image, and how to evaluate its quality and stability.

- Changing prompt weights: how to adjust the importance of each prompt keyword in relation to the others.

- Prompt editing: how to switch part of a prompt to different text after a given number of sampling steps.

- Prompt order: how to order the keywords in your prompt according to their relevance and impact.

- Prompt matrix: how to use a matrix of prompts to test different variations in a single run.
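The prompt syntax covered in that article can be sketched in a few lines of Python. This is a simplified model of the behavior documented for the AUTOMATIC1111 UI, not its actual parser: `(keyword)` multiplies attention by 1.1, `[keyword]` divides it by 1.1, `(keyword:1.5)` sets the multiplier explicitly, `[from:to:when]` switches text after step `when` (or after that fraction of the total steps when `when` is below 1), and the Prompt matrix script splits on `|`, keeping the first part in every image. The function names are hypothetical, the weight helper only handles a single wrapped keyword, and the matrix ordering may differ from the UI's.

```python
import re
from itertools import combinations

ROUND_BRACKET = 1.1       # (keyword) multiplies attention by 1.1
SQUARE_BRACKET = 1 / 1.1  # [keyword] divides attention by 1.1


def emphasis_weight(token: str) -> tuple[str, float]:
    """Effective weight of ONE wrapped keyword, e.g. '((cat))' or '(cat:1.5)'.

    Simplified sketch: it does not parse full prompts or [from:to:when] syntax.
    """
    weight = 1.0
    # Peel outer () or [] pairs one layer at a time, multiplying as we go.
    while len(token) >= 2 and token[0] + token[-1] in ("()", "[]"):
        opener, token = token[0], token[1:-1]
        if opener == "[":
            weight *= SQUARE_BRACKET
            continue
        explicit = re.fullmatch(r"(.*):\s*(\d+(?:\.\d+)?)", token)
        if explicit:  # (keyword:1.5) uses the given number instead of 1.1
            token, weight = explicit.group(1), weight * float(explicit.group(2))
        else:
            weight *= ROUND_BRACKET
    return token, round(weight, 4)


def edited_prompt(before: str, after: str, when: float, step: int, total_steps: int) -> str:
    """Text that [before:after:when] resolves to at a 1-based sampling step."""
    # Values below 1 are a fraction of the steps; otherwise an absolute step.
    switch_after = int(when * total_steps) if when < 1 else int(when)
    return before if step <= switch_after else after


def prompt_matrix(prompt: str) -> list[str]:
    """Every combination of the optional |-separated parts; the first part is always kept."""
    base, *options = [part.strip() for part in prompt.split("|")]
    variants = []
    for k in range(len(options) + 1):
        for combo in combinations(options, k):
            variants.append(", ".join([base, *combo]))
    return variants
```

For example, `emphasis_weight("((cat))")` returns `("cat", 1.21)`, and `prompt_matrix("a cat|red hat|sunglasses")` yields 4 prompts (2 optional parts give 2² combinations).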

Intermediate

Creating Higher Resolution Pictures

Now that we know the basics, we need to learn how to create higher-resolution images. Stable Diffusion (v1.x) was trained on 512x512 pictures, so generating big pictures directly usually results in poor quality or confused compositions (for example, duplicated subjects). The fix is to generate a smaller picture first, then upscale it and re-generate it:

Stable Diffusion Ultimate Guide pt. 3: High Resolution | by Umberto Grando | Mar, 2023 | Medium

In this tutorial you'll learn:

- Upscaling an image

- Using img2img to generate a new image starting from an input

- Fixing an image using Krita

- Working with distorted outputs
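To make the "small first, then upscale" idea concrete, here is a hypothetical helper that picks a first-pass size whose short side stays at 512 pixels and then computes the size for the upscaled re-generation pass. It assumes dimensions should be multiples of 8, because SD works on a latent image 8 times smaller than the output; the 2x upscale factor is only an example, and this is one common rule of thumb rather than the exact procedure from the article.

```python
def round_to_multiple(value: float, multiple: int = 8) -> int:
    """Round a dimension to the nearest multiple of 8 (SD's latent is 1/8 of the image size)."""
    return max(multiple, round(value / multiple) * multiple)


def two_pass_sizes(aspect_w: int, aspect_h: int, upscale: float = 2.0, base_side: int = 512):
    """Return (first_pass, second_pass) sizes as (width, height) tuples.

    The first pass keeps the short side at the training resolution; the second
    pass is the upscaled size that gets re-generated with img2img.
    """
    if aspect_w <= aspect_h:
        w, h = base_side, base_side * aspect_h / aspect_w
    else:
        w, h = base_side * aspect_w / aspect_h, base_side
    first = (round_to_multiple(w), round_to_multiple(h))
    second = (round_to_multiple(first[0] * upscale), round_to_multiple(first[1] * upscale))
    return first, second
```

For a 2:3 portrait, `two_pass_sizes(2, 3)` gives a 512x768 first pass and a 1024x1536 second pass.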

Inpainting

Sometimes you get an image that is 90% awesome and 10% horrible; that's when the inpainting tool comes to the rescue. Check out my tutorial at:

Stable Diffusion Ultimate Guide pt. 4: Inpainting | by Umberto Grando | Apr, 2023 | Medium

In this tutorial you'll learn:

- The inpainting interface

- Clearing parts of the image

- Fixing objects

- Fixing eyes

- Fixing hands

- Adding details

ControlNet

One of the problems you'll encounter when working with Stable Diffusion is the models' lack of controllability. It's difficult to get a specific pose, or to add an object that SD doesn't know to a picture. Luckily, ControlNet makes this much easier.

You can check out my tutorial here:

Stable Diffusion Ultimate Guide pt. 5: Controlnet | by Umberto Grando | May, 2023 | Medium

In this tutorial you'll learn:

- What ControlNet is

- How to install the ControlNet extension

- How to download the ControlNet models

- Using the ControlNet Extension

Updated Workflow

In this article you'll find my updated workflow to generate high-quality pictures using my custom models. Check out my tutorial at:

Stable Diffusion Ultimate Guide pt. 6: Workflow | by Umberto Grando | Jun, 2023 | Medium

In this tutorial you'll learn:

- My complete workflow

- How I upscale pictures while adding details

- How I use Krita to fix imperfections

- How I change the overall composition of a picture

FAQ

Models and LoRAs

My output looks gray

If your output looks gray and washed out, you are probably not using a compatible VAE. You can install the one I'm currently using from here:

https://huggingface.co/iZELX1/Grapefruit/blob/main/Grapefruit.vae.pt

To install it, save the file into the UI's VAE folder (models/VAE inside your AUTOMATIC1111 install). Then go to Settings and, under Stable Diffusion, select it in the SD VAE dropdown.
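If you prefer to script the download, here is a minimal sketch using only the Python standard library. It assumes you pass the folder of your AUTOMATIC1111 install as `webui_root`, and it relies on Hugging Face serving the raw file when `/blob/` in the page URL is replaced with `/resolve/`. The helper names are hypothetical, and you still need to select the VAE in Settings afterwards.

```python
from pathlib import Path
from urllib.request import urlretrieve


def hf_download_url(blob_url: str) -> str:
    """Turn a Hugging Face '/blob/' page URL into a direct-download '/resolve/' URL."""
    return blob_url.replace("/blob/", "/resolve/", 1)


def vae_destination(webui_root: str, filename: str) -> Path:
    """Where the AUTOMATIC1111 UI looks for VAE files: <webui_root>/models/VAE/."""
    return Path(webui_root) / "models" / "VAE" / filename


def install_vae(blob_url: str, webui_root: str) -> Path:
    """Download the VAE into the UI's VAE folder and return the saved path."""
    filename = blob_url.rsplit("/", 1)[-1]
    dest = vae_destination(webui_root, filename)
    dest.parent.mkdir(parents=True, exist_ok=True)
    urlretrieve(hf_download_url(blob_url), dest)  # network download happens here
    return dest
```

Usage (the folder name `stable-diffusion-webui` is just an example): `install_vae("https://huggingface.co/iZELX1/Grapefruit/blob/main/Grapefruit.vae.pt", "stable-diffusion-webui")`.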

I've used the same settings as you, but my result is completely different

If your pictures look less detailed than mine, the problem is probably that you are not following a solid workflow. I've detailed mine in a step-by-step guide here:

Stable Diffusion Ultimate Guide pt. 3: High Resolution | by Umberto Grando | Mar, 2023 | Medium

How do you train your LoRAs?

Right now I don't have a specific workflow for creating LoRAs. I use the bmaltais/kohya_ss (github.com) GUI to create all my LoRAs.

The settings I usually use are:

- Epoch: 8

- mixed/save precision: fp16

- Learning Rate: 0.001

- LR Scheduler: cosine with restarts

- LR warmup: 10%

- Network rank (dimension): 8

- Network alpha: 4 (sometimes 16,32,64)

- LR Number of Cycles: 12

- The rest of the settings are left as default
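How some of these settings interact can be sketched in Python. Two assumptions: the "cosine with restarts" scheduler is modeled on the cosine-with-hard-restarts formula from Hugging Face transformers/diffusers (linear warmup, then `num_cycles` cosine decays that each restart at the full learning rate), which kohya_ss is assumed to use under the hood; and the LoRA update is scaled by alpha / rank, as in kohya's sd-scripts. The total step count is hypothetical, since the real number depends on images, repeats, epochs, and batch size.

```python
import math


def cosine_with_restarts_lr(step, total_steps, base_lr=1e-3, warmup_ratio=0.10, num_cycles=12):
    """Learning rate at `step`: linear warmup, then cosine decay with hard restarts."""
    warmup_steps = int(total_steps * warmup_ratio)
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr during warmup.
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    if progress >= 1.0:
        return 0.0
    # Each of the num_cycles cycles decays from base_lr towards 0, then restarts.
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * ((num_cycles * progress) % 1.0)))


def lora_scale(network_alpha, network_rank):
    """Multiplier applied to the LoRA update (alpha / rank)."""
    return network_alpha / network_rank
```

With rank 8, an alpha of 4 halves the LoRA update (`lora_scale(4, 8) == 0.5`), while 16, 32, or 64 amplify it 2x, 4x, or 8x, so alpha effectively acts together with the learning rate.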

My image looks oversaturated

If your image looks oversaturated (harsh, overly intense colors), you are probably using a CFG value that is too high. To fix it, lower that value. The correct value changes from checkpoint to checkpoint: for example, my Ruby model usually works with a value between 3 and 4, while my Anime model works well with a value of 6 or 7.
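Why the CFG value matters can be illustrated with the classifier-free guidance formula: at each step the model predicts the noise both with and without your prompt, and the CFG scale extrapolates from the unprompted prediction toward the prompted one. The sketch below uses plain lists as hypothetical stand-ins for those predictions. A scale of 1 returns the prompted prediction unchanged, and larger scales push further past it, which is one common explanation for the oversaturated look at high CFG.

```python
def cfg_combine(uncond, cond, guidance_scale):
    """Classifier-free guidance: uncond + scale * (cond - uncond), element by element."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]
```

For instance, with `uncond = [0.0]` and `cond = [1.0]`, a scale of 3.5 gives `[3.5]` and a scale of 7 gives `[7.0]`: doubling the scale doubles how far the result overshoots the prompted prediction.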

Models and LoRAs

Models and LoRAs are listed on CivitAI:

Creator Profile | Civitai